Introduction

In the digital era, data is the foundation of decision-making. Whether you’re a startup analyzing competitors, a researcher compiling statistics, or an enterprise tracking product availability, access to high-quality structured data is essential. But data isn’t always readily available through APIs or official channels.

That’s where lists crawlers come into play.

A lists crawler is an automated tool designed to detect and extract structured information presented in list formats from websites. These crawlers navigate the web, locate recurring data blocks, and collect content like product listings, job ads, directory entries, or reviews—at scale and with speed.

This article explores the technical mechanics, business use cases, implementation strategies, and ethical considerations of lists crawlers. By the end, you’ll understand how they work, why they matter, and how to use them effectively.

What Exactly Is a Lists Crawler?

A lists crawler is a specialized type of web crawler (or scraper) that focuses on repetitive, structured data on websites. Unlike broad crawlers that attempt to index all content, lists crawlers are optimized to identify and extract data that appears in formats like:

- HTML lists (<ul>, <ol>)

- Tables (<table>, <tr>, <td>)

- Grid or card layouts (commonly using <div> blocks)

- Paginated search results

- Infinite scroll lists

- JSON responses from dynamic web applications

For example, on an e-commerce website, a lists crawler would target the product grid—extracting the product name, price, image, and link for each item. On a job board, it would pull job titles, companies, and locations from search results.

The goal is automation: collecting useful structured data across many pages or even websites without manual copy-paste work.

Key Features of Lists Crawlers

Here are the core features that distinguish lists crawlers from traditional web crawlers:

- Pattern Recognition: Lists crawlers are trained or programmed to detect repeating HTML patterns that indicate a list of items.

- Selective Extraction: They extract only relevant fields from each list item (e.g., title, price, image), not entire pages.

- Pagination Handling: Smart lists crawlers detect “Next” buttons or dynamic scrolls to navigate through multiple pages.

- Structured Output: Results are exported in structured formats like CSV, JSON, Excel, or fed into databases.

- Error Resilience: They can handle unexpected issues, such as missing fields, broken pages, or inconsistent formats.

- Dynamic Content Support: With headless browser integration, lists crawlers can extract data from JavaScript-heavy pages.

How Lists Crawlers Work: Step-by-Step

To better understand how a lists crawler operates, let’s walk through a simplified process:

1. URL Selection

Choose one or more starting points—usually category or search result pages that contain the lists you want.

2. HTTP Request

The crawler sends a request to load the HTML content of the page. This can include headers to mimic a real browser.
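As a rough illustration of this step, the sketch below builds a request with browser-like headers using only Python's standard library. The function names (`build_request`, `fetch_html`) and the header values are assumptions made for this example, not part of any particular crawler.

```python
import urllib.request


def build_request(url: str) -> urllib.request.Request:
    """Build a GET request with browser-like headers (values are illustrative)."""
    headers = {
        "User-Agent": "Mozilla/5.0 (compatible; example-lists-crawler/0.1)",
        "Accept": "text/html,application/xhtml+xml",
        "Accept-Language": "en-US,en;q=0.9",
    }
    return urllib.request.Request(url, headers=headers)


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page and decode it, falling back to UTF-8 if no charset is sent."""
    with urllib.request.urlopen(build_request(url), timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")
```

Keeping request construction separate from the network call makes the headers easy to inspect or adjust without touching the download logic.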

3. Content Parsing

Once the content is fetched, it is parsed into a navigable structure using HTML parsers or DOM libraries.

4. List Detection

The crawler identifies repeating blocks or tags. These are usually cards, rows, or containers that hold similar types of data.
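One simple way to approximate list detection is to count how often each tag-and-class combination appears and pick the most frequent one, preferring the outermost element when counts tie. The sketch below is a heuristic under that assumption; `RepeatCounter` and `detect_list_item` are hypothetical names, and real pages may need stricter rules.

```python
from collections import Counter
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not affect depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}


class RepeatCounter(HTMLParser):
    """Count (tag, class) signatures and remember how shallow each one appears."""

    def __init__(self):
        super().__init__()
        self.depth = 0
        self.counts = Counter()
        self.min_depth = {}

    def handle_starttag(self, tag, attrs):
        classes = dict(attrs).get("class") or ""
        signature = (tag, " ".join(sorted(classes.split())))
        self.counts[signature] += 1
        self.min_depth[signature] = min(
            self.min_depth.get(signature, self.depth), self.depth
        )
        if tag not in VOID_TAGS:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.depth > 0:
            self.depth -= 1


def detect_list_item(html, min_repeats=3):
    """Return the (tag, class) signature most likely to be the list item, or None."""
    parser = RepeatCounter()
    parser.feed(html)
    candidates = [s for s, n in parser.counts.items() if n >= min_repeats]
    if not candidates:
        return None
    # Most frequent wins; on ties, prefer the shallowest (outermost) element.
    return max(candidates, key=lambda s: (parser.counts[s], -parser.min_depth[s]))
```

On a product grid where every card is a `<div class="product">` containing a name and a price, the card, name, and price all repeat equally often, and the tie-break selects the card because it sits closest to the root.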

5. Field Extraction

Specific pieces of information (e.g., title, link, price) are extracted using selectors like XPath or CSS queries.
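To make field extraction concrete, here is a small sketch that uses the limited XPath support in Python's `xml.etree.ElementTree`. It assumes the markup is well-formed (XML-like), which real-world HTML often is not; for messy pages an HTML parser such as BeautifulSoup would be the more usual choice. The sample markup, class names, and function names are invented for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed sample markup; the second card has no price.
SAMPLE = """
<div class="grid">
  <div class="product">
    <h2 class="name">Blue Mug</h2>
    <span class="price">$12.50</span>
    <a href="/products/blue-mug">View</a>
  </div>
  <div class="product">
    <h2 class="name">Red Mug</h2>
    <a href="/products/red-mug">View</a>
  </div>
</div>
"""


def text_or_none(node, path):
    """Return the stripped text at `path`, or None when the field is missing."""
    found = node.find(path)
    if found is None or found.text is None:
        return None
    return found.text.strip()


def extract_items(markup):
    """Extract name, price, and link from every product card."""
    root = ET.fromstring(markup)
    items = []
    for node in root.findall(".//div[@class='product']"):
        link = node.find("a")
        items.append({
            "name": text_or_none(node, "h2[@class='name']"),
            "price": text_or_none(node, "span[@class='price']"),
            "url": link.get("href") if link is not None else None,
        })
    return items
```

Returning `None` for a missing field, rather than raising an error, lets one incomplete card pass through without stopping the whole run.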

6. Pagination or Scroll

The crawler looks for pagination buttons or loads more content using scroll simulations or JavaScript event triggers.
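For link-based pagination, the loop can be sketched as follows. This example assumes the site marks its "Next" link with `rel="next"`, which many sites do but not all, and it uses a regular expression that is too fragile for arbitrary HTML. The `fetch` argument is any function that returns a page's HTML for a URL; `find_next_url` and `crawl_pages` are illustrative names. Scroll-based loading needs a headless browser and is not covered here.

```python
import re
from urllib.parse import urljoin

# Matches an <a ...> tag that carries rel="next" (assumed convention).
NEXT_LINK = re.compile(r'<a\b[^>]*\brel="next"[^>]*>', re.IGNORECASE)
HREF = re.compile(r'\bhref="([^"]+)"', re.IGNORECASE)


def find_next_url(html, base_url):
    """Return the absolute URL of the next page, or None if there is none."""
    tag = NEXT_LINK.search(html)
    if not tag:
        return None
    href = HREF.search(tag.group(0))
    return urljoin(base_url, href.group(1)) if href else None


def crawl_pages(start_url, fetch, max_pages=50):
    """Follow next-page links, stopping on loops or after max_pages."""
    seen, pages, url = set(), [], start_url
    while url and url not in seen and len(pages) < max_pages:
        seen.add(url)
        html = fetch(url)
        pages.append((url, html))
        url = find_next_url(html, url)
    return pages
```

The `seen` set and the `max_pages` cap guard against sites whose last page links back to the first, which would otherwise keep the crawler running indefinitely.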

7. Data Cleaning

Extracted data is normalized—removing duplicates, fixing encodings, trimming white space, and validating formats.
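A cleaning pass along these lines might look like the sketch below. It assumes records are dictionaries with `name` and `price` keys and that prices use a comma as the thousands separator and a dot for decimals; other locales would need different parsing. All function names are illustrative.

```python
import re
import unicodedata


def clean_text(value):
    """Normalize Unicode forms and collapse runs of whitespace."""
    value = unicodedata.normalize("NFKC", value)
    return re.sub(r"\s+", " ", value).strip()


def parse_price(value):
    """Parse strings like '$1,299.00' into a float; return None if no number is found."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", value)
    if not match:
        return None
    return float(match.group(0).replace(",", ""))


def clean_records(records):
    """Trim fields, validate prices, drop nameless rows and case-insensitive duplicates."""
    cleaned, seen = [], set()
    for rec in records:
        name = clean_text(rec.get("name") or "")
        if not name:
            continue  # a row without a name cannot be identified
        key = name.casefold()
        if key in seen:
            continue  # duplicate of an earlier row
        seen.add(key)
        cleaned.append({"name": name, "price": parse_price(rec.get("price") or "")})
    return cleaned
```

Deduplicating on the name alone is a simplification; when a stable identifier such as the item URL is available, it usually makes a safer key.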

8. Data Output

Final data is stored in your preferred format: JSON, Excel, CSV, SQL database, or cloud storage.
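For file-based output, Python's standard `csv` and `json` modules are usually enough. The sketch below serializes records to CSV and to JSON Lines (one JSON object per line); the function names and field names are assumptions for this example.

```python
import csv
import io
import json


def to_csv(records, fieldnames):
    """Serialize records to CSV text; keys not listed in fieldnames are ignored."""
    buf = io.StringIO()
    writer = csv.DictWriter(
        buf, fieldnames=fieldnames, extrasaction="ignore", lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


def to_json_lines(records):
    """Serialize records as JSON Lines, keeping non-ASCII characters readable."""
    return "".join(json.dumps(rec, ensure_ascii=False) + "\n" for rec in records)
```

JSON Lines is convenient for crawlers because each record can be appended as soon as it is scraped, without rewriting the whole file.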

Why Use a Lists Crawler?

1. Saves Time

Collecting large amounts of data manually is slow and tedious. Lists crawlers automate the process in minutes.

2. Improves Accuracy

Automated extraction reduces human error and ensures consistent formatting across data entries.

3. Enables Real-Time Insights

Scheduled crawlers can monitor changes to prices, product availability, or job postings and update your database automatically.

4. Powers Business Intelligence

Crawled data can be analyzed for trends, benchmarking, sentiment analysis, forecasting, and more.
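The monitoring idea in point 3 comes down to comparing the latest crawl with the previous one. The sketch below shows one way to do that, assuming each record is a dictionary with a unique `url` and that `price` and `in_stock` are the fields worth watching; these names are hypothetical.

```python
def diff_snapshots(previous, current, key="url", watch=("price", "in_stock")):
    """Compare two crawls and report added, removed, and changed items."""
    old = {rec[key]: rec for rec in previous}
    new = {rec[key]: rec for rec in current}

    added = [new[k] for k in new if k not in old]
    removed = [old[k] for k in old if k not in new]

    changed = []
    for k in new:
        if k not in old:
            continue
        for field in watch:
            before, after = old[k].get(field), new[k].get(field)
            if before != after:
                changed.append((k, field, before, after))

    return {"added": added, "removed": removed, "changed": changed}
```

Run after each scheduled crawl, a function like this yields only the differences, which is typically what gets written to a database or sent as an alert.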

Top Use Cases Across Industries

E-commerce

- Competitor price tracking

- Monitoring stock availability

- Aggregating product reviews

- Creating affiliate marketing feeds

Recruitment & HR

- Aggregating job listings

- Tracking employer hiring trends

- Compiling job market data

Travel & Hospitality

- Monitoring hotel and flight prices

- Scraping reviews or package listings

- Compiling destination guides

Real Estate

- Extracting listings by location, price, or property type

- Monitoring market changes

- Analyzing agents and competitors

Research & Academia

- Gathering structured datasets for academic projects

- Analyzing media, events, or public data

- Creating large corpora for machine learning

Lead Generation & Marketing

- Scraping business directories for contact information

- Extracting social media bios or public profiles

- Compiling niche-specific email lists

How to Build or Use a Lists Crawler

You can either build your own or use ready-made tools depending on your needs.

Option 1: Build Your Own

Technologies You Might Use:

- Python (BeautifulSoup, Scrapy, Selenium)

- JavaScript (Node.js with Puppeteer, Cheerio)

- Go (Colly)

- Headless Browsers (Playwright, Puppeteer)

Sample Code (Python + BeautifulSoup):

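```python
import requests
from bs4 import BeautifulSoup

url = "https://example.com/products"
headers = {"User-Agent": "Mozilla/5.0"}

# Fetch the page; fail fast on HTTP errors or a hung connection.
response = requests.get(url, headers=headers, timeout=10)
response.raise_for_status()

soup = BeautifulSoup(response.text, "html.parser")

# Each product card is assumed to be a <div class="product">.
for item in soup.select("div.product"):
    name = item.select_one("h2.name")
    price = item.select_one("span.price")
    if name is None or price is None:
        continue  # skip cards that are missing a field
    print(f"{name.get_text(strip=True)} - {price.get_text(strip=True)}")
```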

Option 2: Use a No-Code Tool

No-code scraping platforms often offer:

- Point-and-click list detection

- Built-in pagination handling

- Export to Google Sheets or APIs

- Scheduling and cloud storage

Examples include browser plugins, visual scraping platforms, or enterprise-level data solutions.

Challenges and Limitations

1. Changing Website Structures

If a site redesigns its HTML, your crawler may break. Regular maintenance is needed.

2. Anti-Bot Measures

Sites may block bots using CAPTCHAs, IP blocks, or bot detection systems.

3. Legal Risks

Scraping content that violates terms of service or contains copyrighted/personal data can lead to legal issues.

4. JavaScript Complexity

Sites that load data via JavaScript or APIs may require more advanced tools or browser simulation.
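When a page loads its list from a JSON endpoint, reading that response directly is often simpler than rendering the page in a browser. The sketch below assumes a hypothetical payload shape in which the records sit under an `items` key; real endpoints vary and have to be inspected case by case.

```python
import json


def extract_from_json(payload, list_key="items"):
    """Pull name and price out of a JSON response whose list lives under list_key."""
    data = json.loads(payload)
    rows = data.get(list_key, []) if isinstance(data, dict) else []
    return [
        {"name": row.get("name"), "price": row.get("price")}
        for row in rows
        if isinstance(row, dict)  # ignore malformed entries
    ]
```

The `isinstance` checks keep an unexpected response shape from crashing the crawler; it returns an empty list instead.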

Best Practices and Ethics

- Respect robots.txt: Always check and obey crawl directives.

- Throttle Requests: Use delays and rate limits to avoid overloading servers.

- Avoid Sensitive Data: Do not scrape personal or confidential info without explicit permission.

- Attribute Sources (if republishing): Give credit if using scraped data publicly.

- Stay Compliant: Follow data privacy regulations (GDPR, CCPA, etc.).
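The first two practices can be sketched with Python's standard library: `urllib.robotparser` evaluates robots.txt rules, and a small throttle enforces a minimum gap between requests. The `load_rules` and `Throttle` names are assumptions for this example, and the robots.txt text is passed in as a string so the rule check can be tried without network access.

```python
import time
from urllib.robotparser import RobotFileParser


def load_rules(robots_txt):
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class Throttle:
    """Ensure at least min_interval seconds pass between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.clock = clock
        self.sleep = sleep
        self.last = None

    def wait(self):
        now = self.clock()
        if self.last is not None:
            remaining = self.min_interval - (now - self.last)
            if remaining > 0:
                self.sleep(remaining)
        self.last = self.clock()
```

A crawler would typically call `rules.can_fetch(user_agent, url)` before each request and `throttle.wait()` just before sending it, using the site's `Crawl-delay` value (via `rules.crawl_delay(user_agent)`) as the interval when one is declared.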

Frequently Asked Questions (FAQs)

Q1: What types of websites are best suited for lists crawlers?

Websites with consistent structures and structured content—like product listings, job boards, directories, and classified ads—are ideal for lists crawlers.

Q2: Is it legal to use a lists crawler?

It depends. Publicly accessible data may be legal to crawl, but scraping content that violates a site’s terms of service, includes copyrighted material, or contains personal data can pose legal risks. Always check applicable laws and terms.

Q3: Can a lists crawler handle JavaScript-rendered content?

Yes. With tools like Selenium, Puppeteer, or Playwright, crawlers can simulate a real browser, interact with the page, and extract dynamically loaded data.

Q4: How can I avoid getting blocked while crawling?

- Rotate IP addresses and user-agent headers

- Add delays between requests

- Use proxies or VPNs

- Avoid aggressive crawling patterns

- Honor rate limits and timeouts

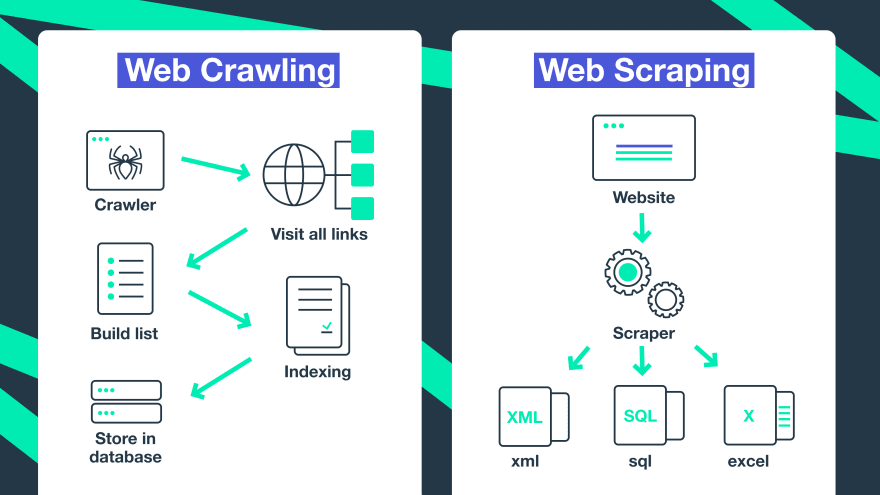

Q5: What’s the difference between crawling and scraping?

Crawling refers to the process of navigating through web pages and discovering content. Scraping is the act of extracting specific data from those pages. Lists crawlers do both: they crawl pages and then scrape structured data from lists.

Q6: Can I schedule a lists crawler to run automatically?

Yes. Most tools or frameworks support scheduled tasks using cron jobs, task queues, or built-in scheduling features. This allows for hourly, daily, or weekly data updates.

Q7: How do I store data collected by a lists crawler?

Common options include CSV/Excel files for small datasets, SQL or NoSQL databases for larger collections, or cloud storage services for scalability and sharing.
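As a small example of the database option, the sketch below stores crawled items in SQLite using Python's built-in `sqlite3` module. The table layout and the choice of `url` as the primary key are assumptions; using the URL as the key means a repeat crawl overwrites an item instead of duplicating it.

```python
import sqlite3


def save_items(conn, items):
    """Insert items, replacing any existing row that has the same URL."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS items (
            url   TEXT PRIMARY KEY,
            name  TEXT NOT NULL,
            price REAL
        )
        """
    )
    conn.executemany(
        "INSERT OR REPLACE INTO items (url, name, price) VALUES (:url, :name, :price)",
        items,
    )
    conn.commit()


def load_items(conn):
    """Return all stored items as (url, name, price) tuples, ordered by URL."""
    return conn.execute("SELECT url, name, price FROM items ORDER BY url").fetchall()
```

For a quick trial, `sqlite3.connect(":memory:")` gives a throwaway database; passing a file path instead makes the data persist between runs.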

Conclusion

Lists crawlers are indispensable in today’s data-driven world. They unlock valuable structured data hidden inside websites and make it accessible for business intelligence, research, marketing, and innovation.

Whether you build your own crawler or use a commercial tool, understanding the underlying principles and ethical considerations ensures you get reliable data without crossing legal or technical boundaries.

Harness the power of lists crawlers responsibly and watch your data collection and analysis efforts soar to new heights.
